The SuperStorage 6019P-ACR12L+ is a 1U server designed for organizations that need a solution for high-density object storage, scale-out storage, Ceph/Hadoop, and Big Data analytics. The server is built on Supermicro’s X11-DDW-NT motherboard family, which supports dual-socket 2nd generation Intel Xeon Scalable processors (Cascade Lake), up to 3TB of ECC DDR4-2933MHz RAM, and Intel Optane DCPMM. Compared with the first-generation Xeon Scalable parts, Cascade Lake offers higher frequencies, more cores at a given price, and more in-processor cache, all of which promote higher performance. As a result, businesses can expect higher performance for the same price, the same performance for a lower price, or better performance at a lower cost than with servers built on the previous generation.

For storage, the 6019P-ACR12L+ can be outfitted with 12x 3.5″ HDD bays, four 7mm NVMe SSD bays, and an M.2 NVMe SSD as a boot drive. Networking starts with onboard 10GbE, and three expansion slots (1x HHHL, 2x FHHL) can take faster NICs so users can get the best possible performance out of their drives. Connectivity includes three RJ45 LAN ports (two 10GBase-T and one dedicated IPMI), four USB 3.0 and two USB 2.0 ports, one VGA port, and a TPM header.
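To put the point about faster NICs in perspective, here is a back-of-the-envelope sketch comparing the raw line rate of the two onboard 10GBase-T ports with the 7.58GB/s 64K sequential read peak reported later in this review. It assumes decimal units and ignores Ethernet and protocol overhead, so usable bandwidth would be somewhat lower; the function name is hypothetical and used only for illustration.

```python
def line_rate_gbytes(ports: int, gbit_per_port: float) -> float:
    """Aggregate raw line rate in GB/s (decimal), ignoring Ethernet/TCP overhead."""
    return ports * gbit_per_port / 8  # 8 bits per byte


onboard = line_rate_gbytes(ports=2, gbit_per_port=10)  # two onboard 10GBase-T ports
flash_seq_read = 7.58  # GB/s, 64K sequential read peak reported in this review

print(f"Onboard 10GbE line rate: {onboard:.2f} GB/s")
print(f"Flash peak is roughly {flash_seq_read / onboard:.1f}x the onboard line rate")
```

Under these assumptions the local flash can read about three times faster than the onboard ports can carry, which is why the expansion slots matter for network-facing deployments.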

There are a few minor differences between the plus and non-plus models. The 6019P-ACR12L+ is equipped with 3x PCI-E 3.0 x16 slots, while the non-plus version has two PCI-E 3.0 x16 slots and one PCI-E 3.0 x8 LP slot. The plus model also uses 800W redundant PSUs instead of the 600W units in the non-plus, and it adds front LEDs for drive activity.

We also recorded an unboxing and overview video of the SuperStorage 6019P-ACR12L+.

Our build consists of 12x 16GB DDR4 DIMMs (192GB total), Samsung PM983 NVMe SSDs (four 3.84TB and one 960GB), and 12x 12TB Seagate Exos HDDs. The Samsung PM983 SSDs included in this evaluation carry a 1.3DWPD endurance rating, which makes them read-oriented drives rather than write-intensive ones.
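A DWPD (drive writes per day) rating converts to total terabytes written (TBW) by multiplying it by the drive capacity, 365 days, and the warranty period. The sketch below assumes a three-year warranty window (an assumption, not something stated in this review) and uses the 960GB boot drive from our build as the example; the helper name is hypothetical.

```python
def dwpd_to_tbw(dwpd: float, capacity_tb: float, warranty_years: float = 3.0) -> float:
    """Total terabytes written implied by a drive-writes-per-day rating.

    DWPD means the full drive capacity can be written this many times per day,
    every day, for the warranty period (assumed here to be 3 years).
    """
    return dwpd * capacity_tb * 365 * warranty_years


# 960GB PM983 boot drive at 1.3 DWPD
tbw = dwpd_to_tbw(dwpd=1.3, capacity_tb=0.96)
print(f"~{tbw:,.0f} TB written over the assumed warranty period")
```

At a little over one full drive write per day, a rating like this suits read-heavy tiers better than sustained write-heavy ones.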

Supermicro SuperStorage 6019P-ACR12L+ Specifications

| Category | Item | Specification |
|---|---|---|
| CPU | Processors | Dual-socket 2nd generation Intel Xeon Scalable (Cascade Lake) |
| System Memory | Memory Capacity | Up to 3TB ECC DDR4-2933MHz; Intel Optane DCPMM supported |
| System Memory | Note | 2933MHz in two DIMMs per channel can be achieved by using memory purchased from Supermicro (Cascade Lake only). Contact your Supermicro sales rep for more info. |
| Input / Output | LAN | 2x RJ45 10GBase-T, 1x RJ45 dedicated IPMI LAN |
| Input / Output | USB | 4x USB 3.0, 2x USB 2.0 |
| Input / Output | Video | 1x VGA port |
| Input / Output | TPM | 1x TPM header |
| Chassis | Form Factor | 1U |
| Chassis | Model | SC802TS-R804WBP |
| Front Panel | Buttons | Power on/off, reset |
| Front Panel | LEDs | Power, HDD, 2x NIC, information status |
| Expansion Slots | PCI-Express | 3x PCI-E 3.0 x16 (1x HHHL, 2x FHHL) |
| Drive Bays | Storage | 12x 3.5″ HDD; 4x hot-swap 2.5″ 7mm NVMe/SATA; 1x M.2 NVMe (boot) |
| Power Supply | Type | 800W redundant power supplies with PMBus |
| Power Supply | Certification | Platinum Level |

Design and build

The SC802TS-R804WBP is a 1U chassis under 2 inches tall, 18 inches wide, and just over 37 inches deep. Thanks to its toolless rail system, the chassis mounts into a server rack without any tools: locking mechanisms on each end of the rails latch onto the square mounting holes at the front and back of the rack.

On the front of the server is a control panel with power on/off and reset buttons, as well as five LEDs: Power, HDD, 2x NIC, and information status. Front connectivity consists of two USB 2.0 ports. Running along the bottom of the front panel are four hot-swap 2.5-inch bays for NVMe/SATA drives.

The front panel also has two locking levers for the internal drive drawer. Loosen the two thumbscrews, then rotate the levers counterclockwise to unlock the drawer or clockwise to lock it. Pulling both levers at the same time pops the drawer out.

In the middle of the back panel are three LAN ports (two RJ45 10GBase-T and one RJ45 dedicated IPMI), one VGA port, and one TPM header. The left side holds two 800W PSUs, while the right side houses three PCIe expansion slots.

Performance

VDBench Workload Analysis

When it comes to benchmarking storage arrays, application testing is best, and synthetic testing comes in second place. While not a perfect representation of actual workloads, synthetic tests do help to baseline storage devices with a repeatability factor that makes it easy to do apples-to-apples comparisons between competing solutions. These workloads span a range of testing profiles, from “four corners” tests and common database transfer size tests to trace captures from different VDI environments. All of these tests leverage the common vdBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices.

Profiles:

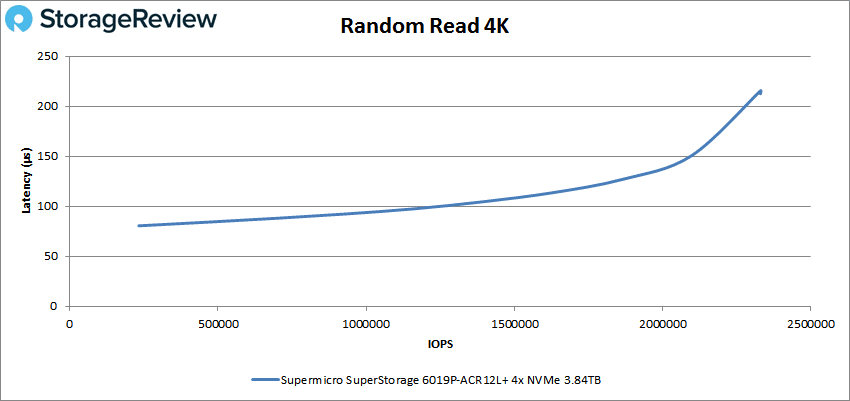

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

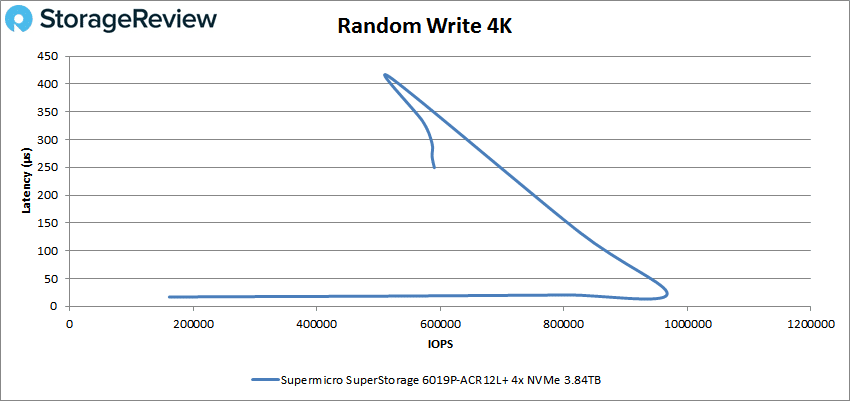

- 4K Random Write: 100% Write, 128 threads, 0-120% iorate

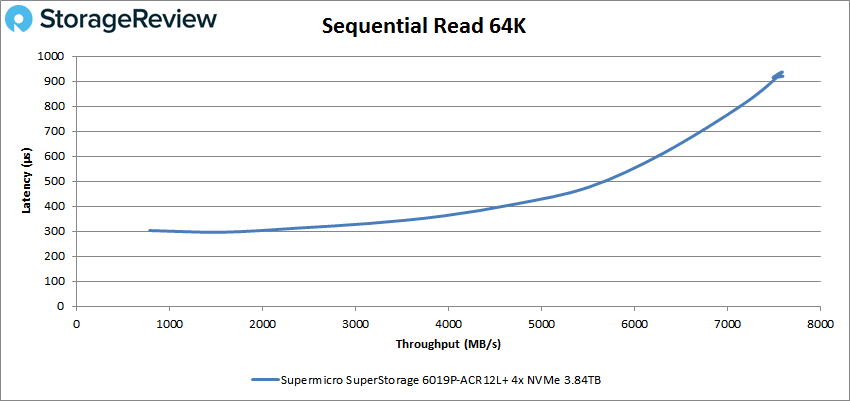

- 64K Sequential Read: 100% Read, 32 threads, 0-120% iorate

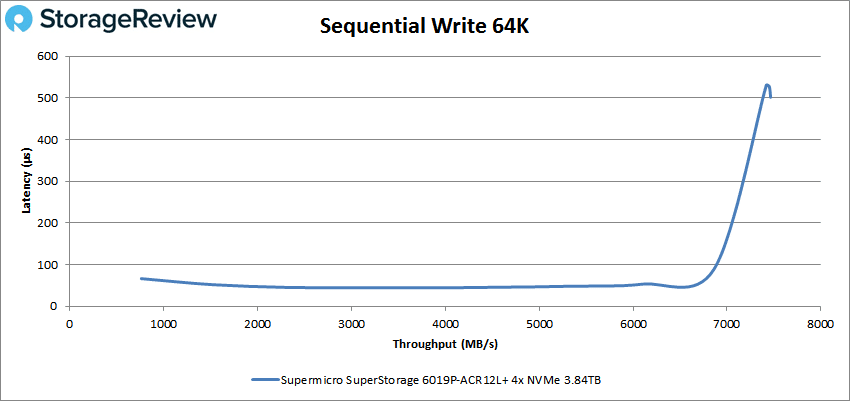

- 64K Sequential Write: 100% Write, 16 threads, 0-120% iorate

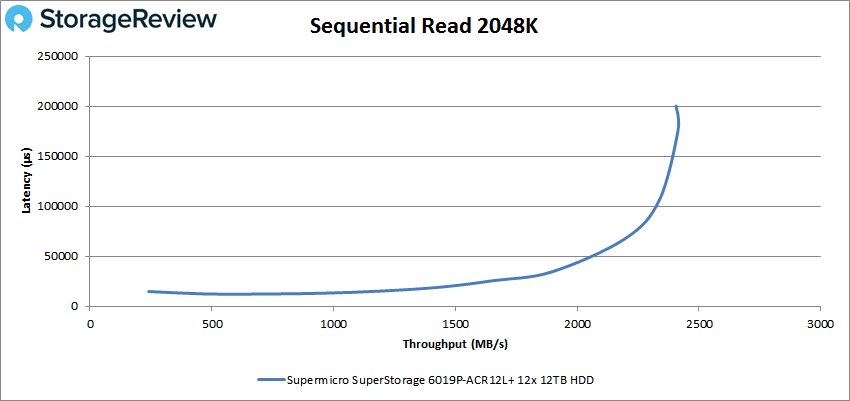

- 2048K Sequential Read: 100% Read, 24 threads, 0-120% iorate

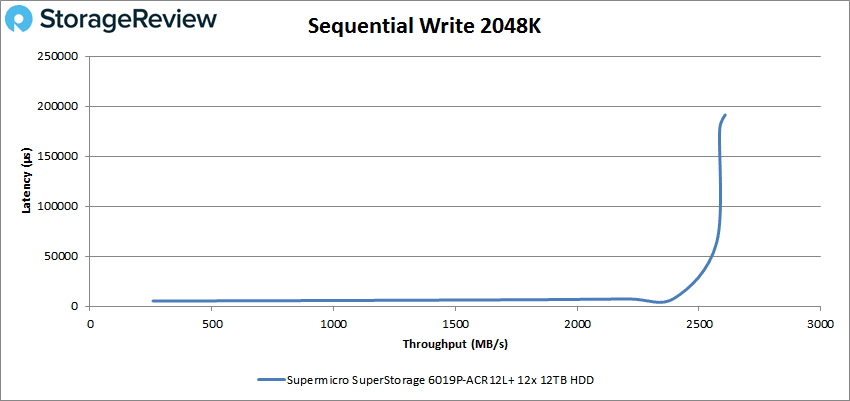

- 2048K Sequential Write: 100% Write, 24 threads, 0-120% iorate

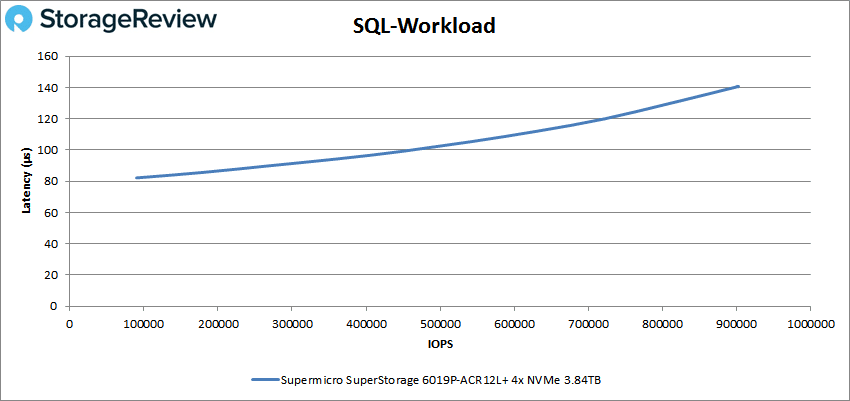

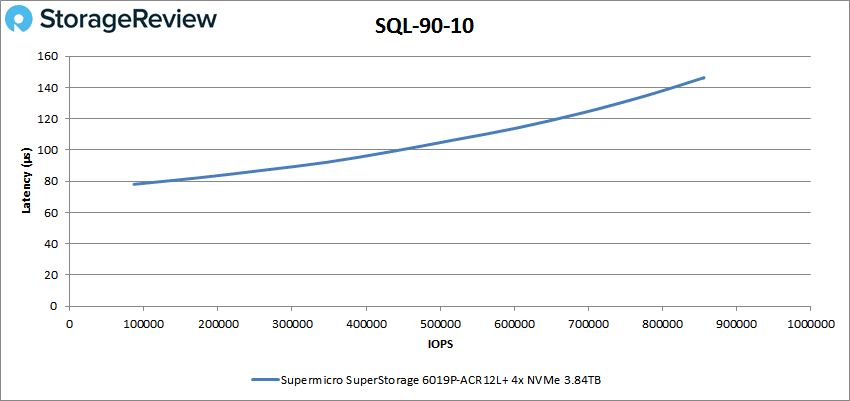

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces
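Each profile above is run as a load curve from 0 to 120% of the rate the system can sustain. A minimal sketch of how such target rates could be derived from a measured maximum, assuming evenly spaced 10% steps (the step size and the helper name are assumptions; this is not the scripting engine used in our lab):

```python
def iorate_curve(max_iops: float, step_pct: int = 10, top_pct: int = 120) -> list[int]:
    """Target IOPS for each load point, as percentages of a measured maximum.

    Points run from step_pct up to top_pct (inclusive); points above 100%
    intentionally over-saturate the system under test.
    """
    return [round(max_iops * pct / 100) for pct in range(step_pct, top_pct + 1, step_pct)]


targets = iorate_curve(2_330_576)  # 4K random read peak reported below
for pct, iops in zip(range(10, 121, 10), targets):
    print(f"{pct:>3}% -> {iops:,} IOPS")
```

The first target (about 233K IOPS) lines up closely with the 233,047 IOPS starting point in the 4K random read results, which is consistent with 10% steps.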

Looking at random 4K read, the SuperStorage 6019P-ACR12L+ recorded sub-millisecond latency throughout, starting at 233,047 IOPS at 80.5μs and peaking at 2,330,576 IOPS with 215.5μs latency.

For random 4K write, the server began at 161,323 IOPS with just 16.8μs latency and maintained this low latency until roughly 970,000 IOPS at the 100% load point. Beyond that point, latency spiked to 411.8ms and throughput fell back to 571K IOPS as the load was pushed to 110% and 120% (over-saturation). This behavior is largely attributable to the PM983 being a read-oriented drive rated at 1.3DWPD of endurance.
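IOPS and latency in these results are linked by Little’s Law: the average number of I/Os in flight equals throughput multiplied by response time. Applying it to the 4K random read start and peak points above shows how much queueing builds up as load rises. This is an implied average, not the configured thread count, and the helper name is hypothetical.

```python
def ios_in_flight(iops: float, latency_us: float) -> float:
    """Average outstanding I/Os implied by Little's Law (L = lambda * W)."""
    return iops * latency_us / 1_000_000  # convert microseconds to seconds


start = ios_in_flight(233_047, 80.5)    # 4K random read, first load point
peak = ios_in_flight(2_330_576, 215.5)  # 4K random read, peak load point
print(f"start: {start:.1f} I/Os in flight, peak: {peak:.1f} I/Os in flight")
```

A roughly tenfold rise in IOPS comes with a roughly 27-fold rise in implied concurrency, which is where the extra latency at the top of the curve comes from.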

Next, we move on to sequential work. In 64K sequential read, the 6019P-ACR12L+ started at 12,593 IOPS or 787MB/s with a latency of 303.1μs before going on to peak at 121,252 IOPS or 7.58GB/s with a latency of 931μs.

For 64K sequential write, the SuperStorage server began at 12,202 IOPS or 763MB/s at 53.2μs latency. The SuperStorage server then peaked at roughly 119K IOPS or 7.45GB/s at 526μs latency.
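Throughput is simply IOPS multiplied by transfer size. The MB/s and GB/s figures quoted for the 64K tests line up with treating 1MB as 1,024KB, as this short sketch shows (the helper name is hypothetical):

```python
def iops_to_mb_per_s(iops: float, xfer_kb: float) -> float:
    """Throughput in MB/s from IOPS and transfer size, using 1 MB = 1,024 KB."""
    return iops * xfer_kb / 1024


for label, iops in [("64K read, start", 12_593),
                    ("64K read, peak", 121_252),
                    ("64K write, start", 12_202)]:
    print(f"{label}: {iops_to_mb_per_s(iops, 64):,.0f} MB/s")
```

The results (787, 7,578, and 763 MB/s) match the 787MB/s, 7.58GB/s, and 763MB/s reported above.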

To measure the raw performance of the twelve 7.2K RPM hard drives inside the server, we applied a 2048K sequential read workload to the 6019P-ACR12L+. Performance started at 121 IOPS or 241MB/s at 14,812μs latency. The SuperStorage server then peaked at roughly 1,202 IOPS or 2,405MB/s at 200,223μs latency.

For 2048K sequential write on the hard drives, the 6019P-ACR12L+ started at 129 IOPS or 259MB/s at 5,360μs latency. The SuperStorage server then peaked at roughly 1,303 IOPS or 2,607MB/s at 191,420μs latency.
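Dividing the aggregate 2048K throughput across the twelve HDDs gives a rough per-drive figure. This sketch assumes the load is spread evenly across all drives, which the test description does not state, and uses the same 1MB = 1,024KB convention as the reported numbers; the helper name is hypothetical.

```python
def per_drive_mb_per_s(iops: float, xfer_kb: float, drives: int) -> float:
    """Average per-drive throughput in MB/s (1 MB = 1,024 KB), assuming an even spread."""
    return iops * xfer_kb / 1024 / drives


read = per_drive_mb_per_s(1_202, 2048, 12)   # 2048K sequential read peak
write = per_drive_mb_per_s(1_303, 2048, 12)  # 2048K sequential write peak
print(f"read: {read:.0f} MB/s per drive, write: {write:.0f} MB/s per drive")
```

Under that assumption, each 7.2K RPM drive contributes roughly 200MB/s on reads and 217MB/s on writes.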

Our next set of tests covers our SQL workloads: SQL, SQL 90-10, and SQL 80-20. Starting with SQL, the 6019P-ACR12L+ peaked at 902,130 IOPS with a latency of only 140.7μs.

For SQL 90-10, the SuperStorage server started at 86,588 IOPS with a latency of 78.1μs and peaked at 855,814 IOPS with 146.3μs in latency.

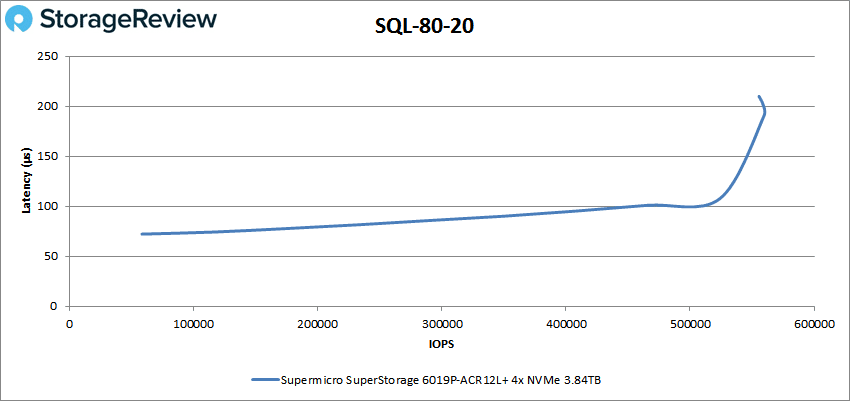

In SQL 80-20, the 6019P-ACR12L+ started at 58,234 IOPS with 72.2μs in latency while peaking at 555,565 IOPS with 210μs in latency.

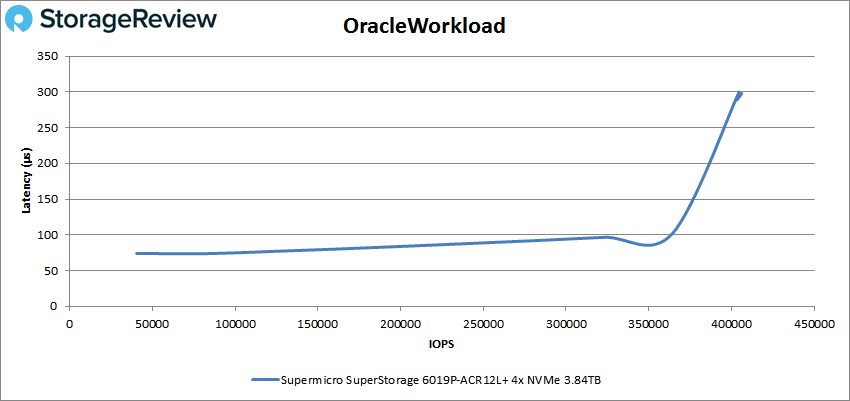

Next up are our Oracle workloads: Oracle, Oracle 90-10, and Oracle 80-20. In the Oracle workload, the 6019P-ACR12L+ started at 74.2μs latency and peaked at 406,222 IOPS with a latency of only 298μs.

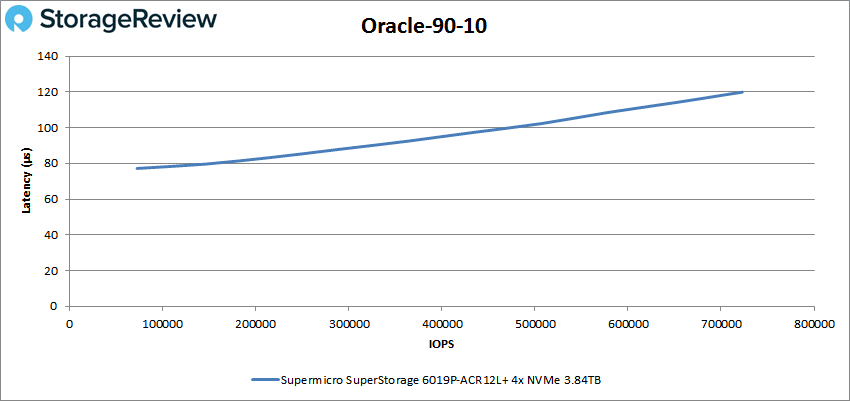

Looking at Oracle 90-10, the SuperStorage server started at 72,398 IOPS with a latency of 77.2μs and peaked at 722,830 IOPS with 120μs in latency.

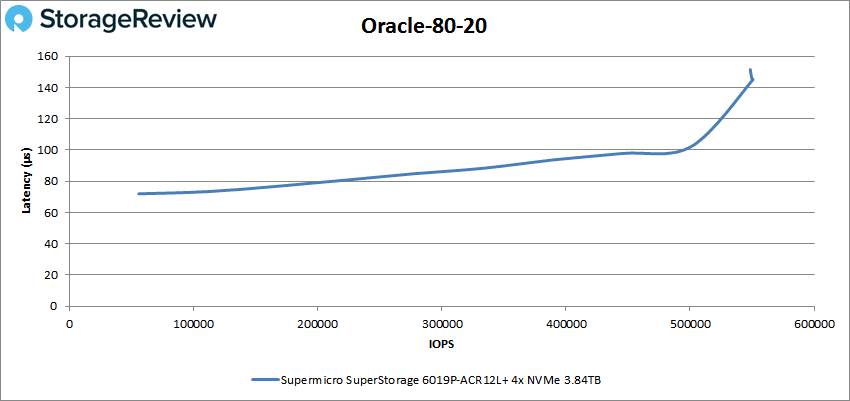

With Oracle 80-20, the 6019P-ACR12L+ began at 66,807 IOPS and a latency of 92μs, while peaking at 677,406 IOPS and a latency of 129μs.

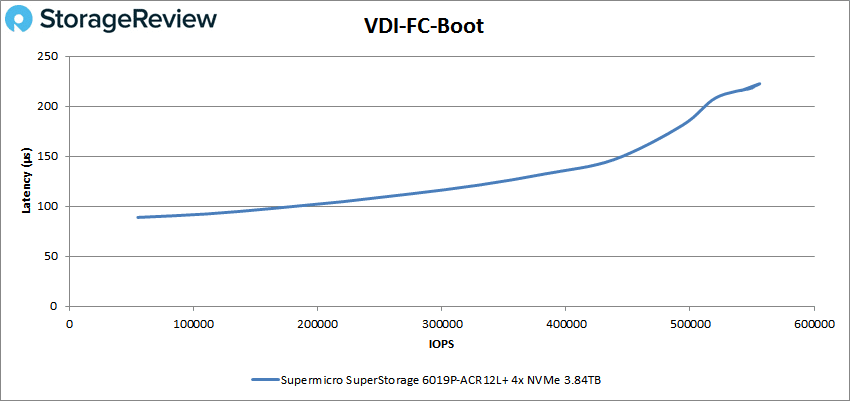

Next, we switched over to our VDI clone tests, Full Clone and Linked Clone. For VDI Full Clone (FC) Boot, the SuperStorage 6019P-ACR12L+ began at 54,918 IOPS and a latency of 88.9μs and peaked at 556,089 IOPS at a latency of 222.5μs.

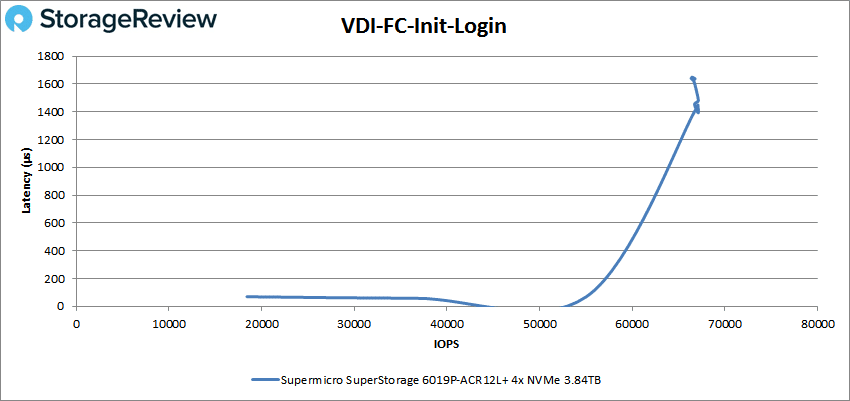

Looking at VDI FC Initial Login, the SuperStorage server started at 18,409 IOPS and 69.3μs latency, hitting a peak at 66,668 IOPS at 1,636μs.

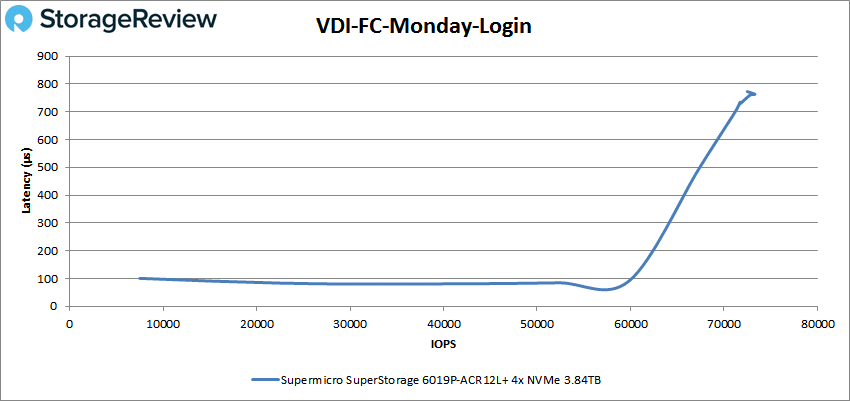

VDI FC Monday Login saw the server start at 7,499 IOPS and 100.7μs latency with a peak of 73,301 IOPS at 763μs.

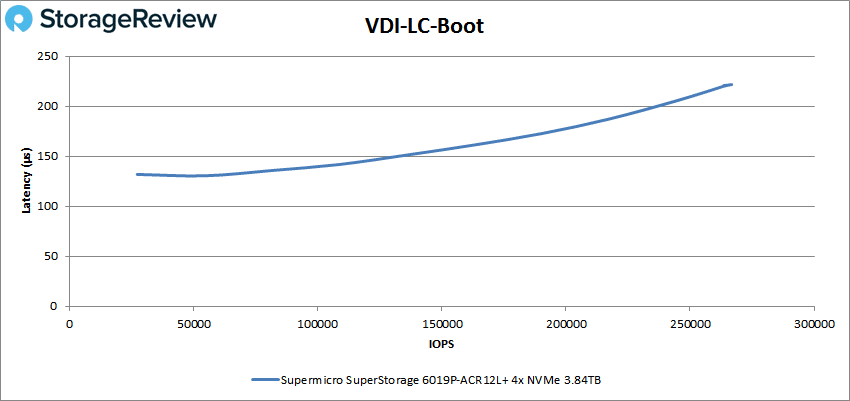

For VDI Linked Clone (LC) Boot, the 6019P-ACR12L+ began at 27,200 IOPS with 131.9μs latency and peaked at 266,391 IOPS at 221.5μs.

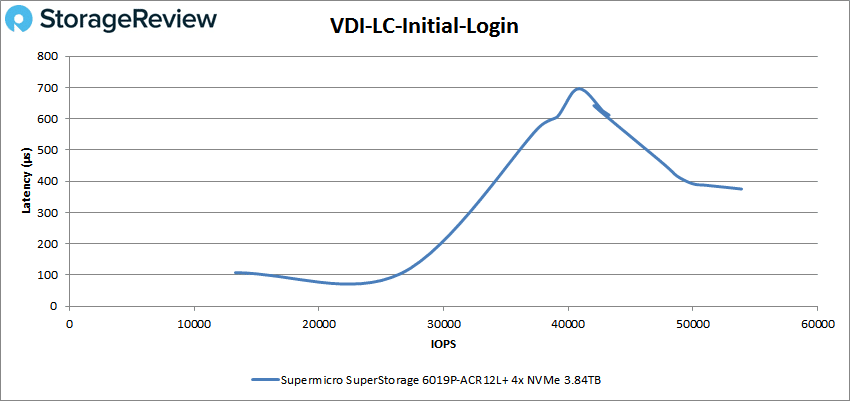

Looking at VDI LC Initial Login, the 6019P-ACR12L+ began at 13,298 IOPS with 107.8μs latency and peaked at 53,900 IOPS at 375.5μs.

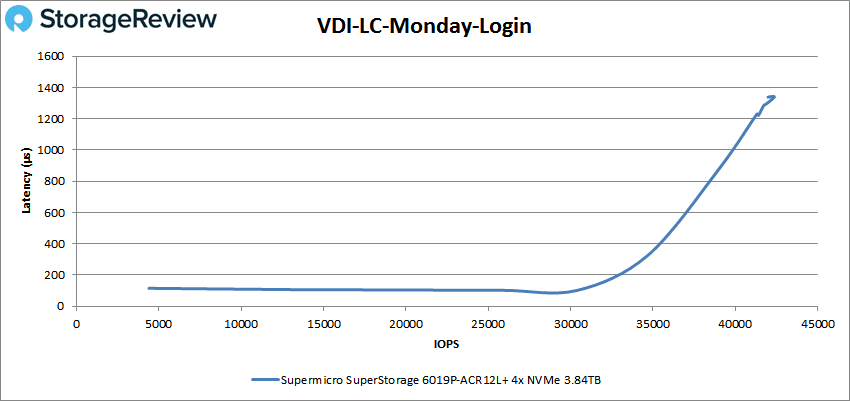

Finally, VDI LC Monday Login had the 6019P-ACR12L+ start at 4,398 IOPS and 115.7μs latency before peaking at 42,381 IOPS at 1,340.3μs.

Conclusion

Supermicro’s SuperStorage 6019P-ACR12L+ is designed for high-density object storage, scale-out storage, Ceph/Hadoop, and Big Data analytics. On the hardware side, the server supports dual-socket 2nd generation Intel Xeon Scalable processors (Cascade Lake) and up to 3TB of ECC DDR4-2933MHz RAM (and/or Intel Optane DCPMM). For storage, it offers twelve 3.5″ HDD bays, four 7mm NVMe SSD bays, and an M.2 NVMe SSD for boot, all in a 1U form factor. For networking, the SuperStorage 6019P-ACR12L+ has 10GbE on board and three expansion slots for additional cards.

For performance, we ran our VDBench Workload Analysis, where the Supermicro SuperStorage 6019P-ACR12L+ posted some fairly good peak numbers. Peak highlights for the flash drives include 2.3 million IOPS in 4K random read, 970K IOPS in 4K random write, and 7.58GB/s read and 7.45GB/s write in 64K sequential. For the spinning media, we ran a 2048K sequential benchmark, hitting about 2.4GB/s read and 2.6GB/s write. In our SQL workloads, the server saw peaks of 902K IOPS, 856K IOPS for 90-10, and 556K IOPS for 80-20. With Oracle, we saw peaks of 406K IOPS, 723K IOPS for 90-10, and 677K IOPS for 80-20. The server continued to do well in our VDI clone tests. For Full Clone, we saw peaks of 556K IOPS for boot, 67K IOPS for Initial Login, and 73K IOPS for Monday Login. For Linked Clone, we saw 266K IOPS for boot, 54K IOPS for Initial Login, and 42K IOPS for Monday Login.

The Supermicro SuperStorage 6019P-ACR12L+ is a 1U server that packs in quite a bit of storage and connectivity for users tackling Big Data problems. Webscale organizations will like the combination of flash and HDD capacity this unit offers, and software-defined storage users will be able to leverage automated tiering to deliver flash-based performance over 144TB (or more) of HDD capacity. Overall, the server’s design is quite novel and entirely different from what most other vendors are doing in 1U. It may not be for everyone, but for those who can take advantage of the storage performance and flexibility this server offers, Supermicro has created a really compelling offering.

Supermicro SuperStorage 6019P-ACR12L+

The post Supermicro SuperStorage 6019P-ACR12L+ Server Review appeared first on StorageReview.com.

For over 20 years, AIC has been a provider of both OEM/ODM and COTS server and storage solutions. Headquartered in Taiwan, and founded in 1996, AIC has decades of experience in creating products that are flexible and configurable. They are extending this expertise to new HCI with the new series announced here.
